..

If I’m not pure, at least my jewels are [1].

..

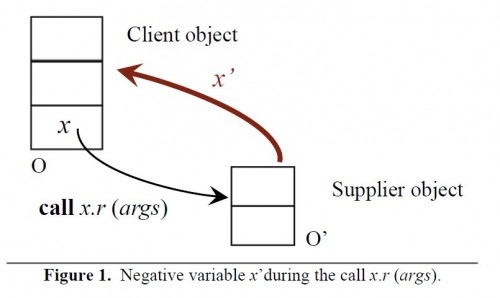

We often need to be reassured that a routine, usually a function, is “pure”, meaning that it does not change the state of the computation. For example, a function used in a contract element (precondition, postcondition, class invariant, loop invariant) should be purely descriptive, since it is a specification element; evaluating it, typically for testing and debugging, should not create a change of behavior — a “Heisenberg effect” — in the very computation that it is intended to assess. Another application is in a concurrency context, particularly in SCOOP (see earlier posts and forthcoming ones): if one or more functions are pure, several of their executions can run concurrently on the same object.

The notion of purity admits variants. The usual notion is what [2] calls weak purity, which precludes changes to previously existing objects but allow creating new objects. In the EiffelBase library we also encounter routines that have another form of purity, which we may call “relative” purity: they can leave the same state on exit as they found on entry, but in-between they might change the state. For the rest of this discussion we will rely on the standard notion of weak purity: no changes permitted on existing objects.

It is often suggested that the programming language should support specifying that a routine is pure; many people have indeed proposed the addition of a keyword such as pure to Eiffel. One of the reasons this is not — in my opinion — such a great idea is that purity is just a special case of the more general problem of framing: specifying and verifying what a routine does not change. If we can specify an arbitrary frame property, then we can, as a special case covered by the general mechanism, specify that a routine changes nothing.

To see why framing is so important, consider a class ACCOUNT with a routine deposit that has the postcondition

balance = old balance + sum………..— Where sum is the argument of deposit

Framing here means stating that nothing else than balance changes; for example the account’s owner and its number should remain the same. It is not practical to write all individual postcondition clauses such as

owner= old owner

number= old number

and so on. But we do need to specify these properties and enforce them, if only to avoid that a descendant class (maybe MAFIA_ACCOUNT) distort the rules defined by the original.

One technique is to add a so-called “modifies clause”, introduced by verification tools such as ESC-Java [3] and JML [4]. Modifies clauses raise some theoretical issues; in particular, the list of modified expressions is often infinite, so we must restrict ourselves to an abstract-data-type view where we characterize a class by commands and queries and the modifies clause only involves queries of the class. Many people find this hard to accept at first, since anything that is not talked about can change, but it is the right approach. A modifies clause of sorts, included in the postcondition, appeared in an earlier iteration of the Eiffel specification, with the keyword only (which is indeed preferable to modifies, which in the Eiffel style favoring grammatically simple keywords would be modify, since what we want to express is not that the routine must change anything at all but that it may only change certain specified results!). The convention worked well with inheritance, since it included the rule that a clause such as only balance, in class ACCOUNT, prescribes that the routine may not, in its modifies version as well as in any version redefined in descendants, change any other query known at the level of ACCOUNT; but a descendant version may change, subject to its own ACCOUNT clauses, any new query introduced by a descendant.

To declare a routine as pure, it would suffice to use an empty only clause (not very elegant syntactically — “only” what? — but one can get used to it).

This construct has been discarded, as it places too heavy a burden on the programmer-specifier. Here the key observation resulted from a non-scientific but pretty extensive survey I made of all the JML code I could get my hands on. I found that every time a query appeared in a modifies clause it was also listed in the postcondition! On reflection, this seems reasonable: if you are serious about specification, as anyone bothering to write such a clause surely is, you will not just express that something changes and stop there; you will write something about how it may change. Not necessarily the exact result, as in

my_query = precise_final_value

but at least some property of that result, as in

some_property (my_query)

This observation has, however, an inescapable consequence for language design: modifies or only clauses should be inferred by the compiler from the postcondition, not imposed on the programmer as an extra burden. The convention, which we may call the Implicit Framing Rule, is simple:

A routine may change the value of a query only if the query is specified in the routine’s postcondition

(or, if you like double negation, “no routine may change the value of a query other than those specified in its postcondition”). Here we say that a feature is “specified” in a postcondition if it appears there outside of the scope of an old expression. (Clearly, an occurrence such as old balance does not imply that balance can be modified, hence this restriction to occurrences outside of an old.)

With this convention the only clause becomes implicit: it would simply list all the queries specified in the postcondition, so there is no need for the programmer to write it. For the rare case of wanting to specify that a query q may change, but not wanting to specify how, it is easy to provide a library function, say involved, that always return true and can be used in postconditions, as in involved (q).

The convention is of course not just a matter of programming methodology but, in an IDE supporting verification, such as the EVE “Verification As a Matter Of Course” environment which we are building for Eiffel [5], the compiler will enforce the definition above — no change permitted to anything not specified in the postcondition — and produce an error in case of a violation. This also means that we can easily specify that a routine is pure: it must simply not specify any query in its postcondition. It may still list it in an old clause, as happens often in practice, e.g.

Result = old balance – Minimum_balance………..— In the postcondition of a function available_funds

Note the need to use old here. Apart from this addition of old to some postconditions, a considerable advantage of the convention is that most existing code using pure functions will be suitable to the new purity enforcement without any need to provide new annotations.

I believe that this is the only sustainable convention. It does not, of course, solve the frame problem by itself (for attempts in this direction see [6, 7]), but it is a necessary condition for a solution that is simple, easily taught, fairly easily implemented, and effective. It goes well with model-based specifications [8, 9], which I believe are the technique of most promise for usable specifications of object-oriented software. And it provides a straightforward, no-frills way to enforce purity where prescribed by the Command-Query Separation principle [10, 11]: if I’m not pure, at least my functions must be.

References

[1] From the lyrics of the aria Glitter and Be Gay in Leonard Bernstein’s Candide, text by Lillian Hellman and others. Youtube offers several performances, including by Diana Damrau (here) and Natalie Dessay (here) . For the text see e.g. here.

[2] Adam Darvas and Peter Müller: Reasoning About Method Calls in Interface Specifications, in Journal of Object Technology, Volume 5, no. 5, jUNE 2006, pages 59-85, doi:10.5381/jot.2006.5.5.a3, available here.

[3] C. Flanagan, K.R.M. Leino, M. Lillibridge, G. Nelson, J. B. Saxe and R. Stata: Extended static checking for Java, in PLDI 2002 (Programming Language Design and Implementation), pages 234–245, 2002.

[4] Gary Leavens et al.: Java Modeling Language, see references here.

[5] Julian Tschannen, Carlo A. Furia, Martin Nordio, and Bertrand Meyer: Verifying Eiffel Programs with Boogie, to appear in Boogie 2011, First International Workshop on Intermediate Verification Languages, Wroclaw, August 2011. See documentation about the EVE project on the project page.

[6] Ioannis Kassios: Dynamic Frames: Support for Framing, Dependencies and Sharing Without Restrictions, in Formal Methods 2006, eds. J. Misra, T. Nipkow and E. Sekerinski, Lecture Notes in Computer Science 4085, Springer Verlag, 2006, pages 268-283.

[7] Bernd Schoeller: Making Classes Provable through Contracts, Models and Frames, PhD thesis, ETH Zurich, 2007, available here

[8] Bernd Schoeller, Tobias Widmer and Bertrand Meyer: Making Specifications Complete Through Models, in Architecting Systems with Trustworthy Components, eds. Ralf Reussner, Judith Stafford and Clemens Szyperski, Lecture Notes in Computer Science, Springer-Verlag, 2006, available here.

[9] Nadia Polikarpova, Carlo Furia and Bertrand Meyer: Specifying Reusable Components, in Verified Software: Theories, Tools, Experiments (VSTTE ’10), Edinburgh, UK, 16-19 August 2010, Lecture Notes in Computer Science, Springer Verlag, 2010, available here.

[10] Bertrand Meyer: Object-Oriented Software Construction, first (1988) and second (1997) editions, Prentice Hall.

[11] Bertrand Meyer: Touch of Class: An Introduction to Programming Well, Using Objects and Contracts, Springer Verlag, 2009.

VN:F [1.9.10_1130]

Rating: 10.0/10 (7 votes cast)

VN:F [1.9.10_1130]

Rating: +3 (from 5 votes)

Informatics Europe

Informatics Europe LASER summer school

LASER summer school Propulsion Academy

Propulsion Academy