Science progresses through people taking advantage of others’ insights and inventions. One of the conditions that makes the game possible is that you acknowledge what you take. For the originator, it is rewarding to see one’s ideas reused, but frustrating when that happens without acknowledgment, especially when you are yourself punctilious about citing your own sources of inspiration.

I have started to record some concepts that are widely known and applied today and which I believe I originated in whole or in part, whether or not their origin is cited by those who took them. The list below is not complete and I may update it in the future. It is not a list of ideas I contributed, only of those fulfilling two criteria:

- Others have built upon them. (If there is an idea that I think is great but no one paid attention to it, the list does not include it.)

- They have gained wide visibility.

There is a narcissistic aspect to this exercise and if people want to dismiss it as just showing I am full of myself so be it. I am just a little tired of being given papers to referee that state that genericity was invented by Java, that no one ever thought of refactoring before agile methods, and so on. It is finally time to state some facts.

Facts indeed: I back every assertion by precise references. So if I am wrong — i.e. someone preceded me — the claims of precedence can be refuted; if so I will update or remove them. All articles by me cited in this note are available (as downloadable PDFs) on my publication page. (The page is up to date until 2018; I am in the process of adding newer publications.)

Post-publication note: I have started to receive some comments and added them in a Notes section at the end; references to those notes are in the format [A].

Final disclaimer (about the narcissistic aspect): the exercise of collecting such of that information was new for me, as I do not usually spend time reflecting on the past. I am much more interested in the future and definitely hope that my next contributions will eclipse any of the ones listed below.

Programming concepts: substitution principle

Far from me any wish to under-represent the seminal contributions of Barbara Liskov, particularly her invention of the concept of abstract data type on which so much relies. As far as I can tell, however, what has come to be known as the “Liskov Substitution Principle” is essentially contained in the discussion of polymorphism in section 10.1 of in the first edition (Prentice Hall, 1988) of my book Object-Oriented Software Construction (hereafter OOSC1); for example, “the type compatibility rule implies that the dynamic type is always a descendant of the static type” (10.1.7) and “if B inherits from A, the set of objects that can be associated at run time with an entity [generalization of variable] includes instances of B and its descendants”.

Perhaps most tellingly, a key aspect of the substitution principle, as listed for example in the Wikipedia entry, is the rule on assertions: in a proper descendant, keep the invariant, keep or weaken the precondition, keep or strengthen the postcondition. This rule was introduced in OOSC1, over several pages in section 11.1. There is also an extensive discussion in the article Eiffel: Applying the Principles of Object-Oriented Design published in the Journal of Systems and Software, May 1986.

The original 1988 Liskov article cited (for example) in the Wikipedia entry on the substitution principle says nothing about this and does not in fact include any of the terms “assertion”, “precondition”, “postcondition” or “invariant”. To me this absence means that the article misses a key property of substitution: that the abstract semantics remain the same. (Also cited is a 1994 Liskov article in TOPLAS, but that was many years after OOSC1 and other articles explaining substitution and the assertion rules.)

Liskov’s original paper states that “if for each object o1 of type S there is an object o2 of type T such that for all programs P defined in terms of T, the behavior of P is unchanged when o1 is substituted for oz, then S is a subtype of T.” As stated, this property is impossible to satisfy: if the behavior is identical, then the implementations are the same, and the two types are identical (or differ only by name). Of course the concrete behaviors are different: applying the operation rotate to two different figures o1 and o2, whose types are subtypes of FIGURE and in some cases of each other, will trigger different algorithms — different behaviors. Only with assertions (contracts) does the substitution idea make sense: the abstract behavior, as characterized by preconditions, postconditions and the class invariants, is the same (modulo respective weakening and strengthening to preserve the flexibility of the different version). Realizing this was a major step in understanding inheritance and typing.

I do not know of any earlier (or contemporary) exposition of this principle and it would be normal to get the appropriate recognition.

Software design: design patterns

Two of the important patterns in the “Gang of Four” Design Patterns book (GoF) by Gamma et al. (1995) are the Command Pattern and the Bridge Pattern. I introduced them (under different names) in the following publications:

- The command pattern appears in OOSC1 under the name “Undo-Redo” in section 12.2. The solution is essentially the same as in GoF. I do not know of any earlier exposition of the technique. See also notes [B] and [C].

- The bridge pattern appears under the name “handle technique” in my book Reusable Software: The Base Component Libraries (Prentice Hall, 1994). It had been described several years earlier in manuals for Eiffel libraries. I do not know of an earlier reference. (The second edition of Object-Oriented Software Construction — Prentice Hall, 1997, “OOSC2” –, which also describes it, states that a similar technique is described in an article by Josef Gil and Ricardo Szmit at the TOOLS USA conference in the summer of 1994, i.e. after the publication of Reusable Software.)

Note that it is pointless to claim precedence over GoF since that book explicitly states that it is collecting known “best practices”, not introducing new ones. The relevant questions are: who, pre-GoF, introduced each of these techniques first; and which publications does the GoF cites as “prior art” for each pattern. In the cases at hand, Command and Bridge, it does not cite OOSC1.

To be concrete: unless someone can point to an earlier reference, then anytime anyone anywhere using an interactive system enters a few “CTRL-Z” to undo commands, possibly followed by some “CTRL-Y” to redo them (or uses other UI conventions to achieve these goals), the software most likely relying on a technique that I first described in the place mentioned above.

Software design: Open-Closed Principle

Another contribution of OOSC1 (1988), section 2.3, reinforced in OOSC2 (1997) is the Open-Closed principle, which explained one of the key aspects of inheritance: the ability to keep a module both closed (immediately usable as is) and open to extension (through inheritance, preserving the basic semantics. I am mentioning this idea only in passing since in this case my contribution is usually recognized, for example in the Wikipedia entry.

Software design: OO for reuse

Reusability: the Case for Object-Oriented Design (1987) is, I believe, the first publication that clearly explained why object-oriented concepts were (and still are today — in Grady Booch’s words, “there is no other game in town”) the best answer to realize the goal of software construction from software components. In particular, the article:

- Explains the relationship between abstract data types and OO programming, showing the former as the theoretical basis for the latter. (The CLU language at MIT originated from Liskov’s pioneering work on abstract data types, but was not OO in the full sense of the term, missing in particular a concept of inheritance.)

- Shows that reusability implies bottom-up development. (Top-down refinement was the mantra at the time, and promoting bottom-up was quite a shock for many people.)

- Explains the role of inheritance for reuse, as a complement to Parnas’s interface-based modular construction with information hiding.

Software design: Design by Contract

The contribution of Design by Contract is one that is widely acknowledged so I don’t have any point to establish here — I will just recall the essentials. The notion of assertion goes back to the work of Floyd, Hoare and Dijkstra in the sixties and seventies, and correctness-by-construction to Dijktra, Gries and Wirth, but Design by Contract is a comprehensive framework providing:

- The use of assertions in an object-oriented context. (The notion of class invariant was mentioned in a paper by Tony Hoare published back in 1972.)

- The connection of inheritance with assertions (as sketched above). That part as far as I know was entirely new.

- A design methodology for quality software: the core of DbC.

- Language constructs carefully seamed into the fabric of the language. (There were precedents there, but in the form of research languages such as Alphard, a paper design only, not implemented, and Euclid.)

- A documentation methodology.

- Support for testing.

- Support for a consistent theory of exception handling (see next).

Design by Contract is sometimes taken to mean simply the addition of a few assertions here and there. What the term actually denotes is a comprehensive methodology with all the above components, tightly integrated into the programming language. Note in particular that preconditions and postconditions are not sufficient; in an OO context class invariants are essential.

Software design: exceptions

Prior to the Design by Contract work, exceptions were defined very vaguely, as something special you do outside of “normal” cases, but without defining “normal”. Design by Contract brings a proper perspective by defining these concepts precisely. This was explained in a 1987 article, Disciplined Exceptions ([86] in the list), rejected by ECOOP but circulated as a technical report; they appear again in detail in OOSC1 (sections 7.10.3 to 7.10.5).

Other important foundational work on exceptions, to which I know no real precursor (as usual I would be happy to correct any omission), addressed what happens to the outcome of an exception in a concurrent or distributed context. This work was done at ETH, in particular in the PhD theses of B. Morandi and A. Kolesnichenko, co-supervised with S. Nanz. See the co-authored papers [345] and [363].

On the verification aspect of exceptions, see below.

Software design: refactoring

I have never seen a discussion of refactoring that refers to the detailed discussion of generalization in both of the books Reusable Software (1994, chapter 3) and Object Success (Prentice Hall, 1995, from page 122 to the end of chapter 6). These discussions describe in detail how, once a program has been shown to work, it should be subject to a posteriori design improvements. It presents several of the refactoring techniques (as they were called when the idea gained traction several years later), such as moving common elements up in the class hierarchy, and adding an abstract class as parent to concrete classes ex post facto.

These ideas are an integral part of the design methodology presented in these books (and again in OOSC2 a few later). It is beyond me why people would present refactoring (or its history, as in the Wikipedia entry on the topic) without referring to these publications, which were widely circulated and are available for anyone to inspect.

Software design: built-in documentation and Single-Product principle

Another original contribution was the idea of including documentation in the code itself and relying on tools to extract the documentation-only information (leaving implementation elements aside). The idea, described in detail in OOSC1 in 1988 (sections 9.4 and 9.5) and already mentioned in the earlier Eiffel papers, is that code should be self-complete, containing elements of various levels of abstraction; some of them describe implementation, but the higher-level elements describe specification, and are distinguished syntactically in such a way that tools can extract them to produce documentation at any desired level of abstraction.

The ideas were later applied through such mechanisms as JavaDoc (with no credit as far as I know). They were present in Eiffel from the start and the underlying principles, in particular the “Single Product principle” (sometimes “Self-Documentation principle”, and also generalized by J. Ostroff and R. Paige as “Single-Model principle”). Eiffel is the best realization of these principles thanks to:

- Contracts (as mentioned above): the “contract view” of a class (called “short form” in earlier descriptions) removes the implementations but shows the relevant preconditions, postconditions and class invariants, given a precise and abstract specification of the class.

- Eiffel syntax has a special place for “header comments”, which describe high-level properties and remain in the contract view.

- Eiffel library class documentation has always been based on specifications automatically extracted from the actual text of the classes, guaranteeing adequacy of the documentation. Several formats are supported (including, from 1995 on, HTML, so that documentation can be automatically deployed on the Web).

- Starting with the EiffelCase tool in the early 90s, and today with the Diagram Tool of EiffelStudio, class structures (inheritance and client relationships) are displayed graphically, again in an automatically extracted form, using either the BON or UML conventions.

One of the core benefits of the Single-Product principle is to guard against what some of my publications called the “Dorian Gray” syndrome: divergence of an implementation from its description, a critical problem in software because of the ease of modifying stuff. Having the documentation as an integral part of the code helps ensure that when information at some level of abstraction (specification, design, implementation) changes, the other levels will be updated as well.

Crucial in the approach is the “roundtripping” requirement: specifiers or implementers can make changes in any of the views, and have them reflected automatically in the other views. For example, you can graphically draw an arrow between two bubbles representing classes B and A in the Diagram Tool, and the code of B will be updated with “inherit A”; or you can add this Inheritance clause textually in the code of class B, and the diagram will be automatically updated with an arrow.

It is important to note how contrarian and subversive these ideas were at the time of their introduction (and still to some extent today). The wisdom was that you do requirements then design then implementation, and that code is a lowly product entirely separate from specification and documentation. Model-Driven Development perpetuates this idea (you are not supposed to modify the code, and if you do there is generally no easy way to propagate the change to the model.) Rehabilitating the code (a precursor idea to agile methods, see below) was a complete change of perspective.

I am aware of no precedent for this Single Product approach. The closest earlier ideas I can think of are in Knuth’s introduction of Literate Programming in the early eighties (with a book in 1984). As in the Single-product approach, documentation is interspersed with code. But the literate programming approach is (as presented) top-down, with English-like explanations progressively being extended with implementation elements. The Single Product approach emphasizes the primacy of code and, in terms of the design process, is very much yoyo, alternating top-down (from the specification to the implementation) and bottom-up (from the implementation to the abstraction) steps. In addition, a large part of the documentation, and often the most important one, is not informal English but formal assertions. I knew about Literate Programming, of course, and learned from it, but Single-Product is something else.

Software design: from patterns to components

Karine Arnout’s thesis at ETH Zurich, resulting in two co-authored articles ([255] and [257], showed that contrary to conventional wisdom a good proportion of the classical design patterns, including some of the most sophisticated, can be transformed into reusable components (indeed part of an Eiffel library). The agent mechanism (see below) was instrumental in achieving that result.

Programming, design and specification concepts: abstract data types

Liskov’s and Zilles’s ground-breaking 1974 abstract data types paper presented the concepts without a mathematical specification, using programming language constructs instead. A 1976 paper (number [3] in my publication list, La Description des Structures de Données, i.e. the description of data structures) was as far as I know one of the first to present a mathematical formalism, as used today in presentations of ADTs. John Guttag was taking a similar approach in his PhD thesis at about the same time, and went further in providing a sound mathematical foundation, introducing in particular (in a 1978 paper with Jim Horning) the notion of sufficient completeness, to which I devoted a full article in this blog (Are My Requirements Complete?) about a year ago. My own article was published in a not very well known journal and in French, so I don’t think it had much direct influence. (My later books reused some of the material.)

The three-level description approach of that article (later presented in English for an ACM workshop in the US in 1981, Pingree Park, reference [28]) is not well known but still applicable, and would be useful to avoid frequent confusions between ADT specifications and more explicit descriptions.

When I wrote my 1976 paper, I was not aware of Guttag’s ongoing work (only of the Liskov and Zilles paper), so the use of a mathematical framework with functions and predicates on them was devised independently. (I remember being quite happy when I saw what the axioms should be for a queue.) Guttag and I both gave talks at a workshop organized by the French programming language interest group in 1977 and it was fun to see that our presentations were almost identical. I think my paper still reads well today (well, if you read French). Whether or not it exerted direct influence, I am proud that it independently introduced the modern way of thinking of abstract data types as characterized by mathematical functions and their formal (predicate calculus) properties.

Language mechanisms: genericity with inheritance

Every once in a while I get to referee a paper that starts “Generics, as introduced in Java…” Well, let’s get some perspective here. Eiffel from its introduction in 1985 combined genericity and inheritance. Initially, C++ users and designers claimed that genericity was not needed in an OO context and the language did not have it; then they introduced template. Initially, the designers of Java claimed (around 1995) that genericity was not needed, and the language did not have it; a few years later Java got generics. Initially, the designers of C# (around 1999) claimed that genericity was not needed, and the language did not have it; a few years later C# and .NET got generics.

Genericity existed before Eiffel of course; what was new was the combination with inheritance. I had been influenced by work on generic modules by a French researcher, Didier Bert, which I believe influenced the design of Ada as well; Ada was the language that brought genericity to a much broader audience than the somewhat confidential languages that had such a mechanism before. But Ada was not object-oriented (it only had modules, not classes). I was passionate about object-oriented programming (at a time when it was generally considered, by the few people who had heard of it as an esoteric, academic pursuit). I started — in the context of an advanced course I was teaching at UC Santa Barbara — an investigation of how the two mechanisms relate to each other. The results were a paper at the first OOPSLA in 1986, Genericity versus Inheritance, and the design of the Eiffel type system, with a class mechanism, inheritance (single and multiple), and genericity, carefully crafted to complement each other.

With the exception of a Trellis-Owl, a design from Digital Equipment Corporation also presented at the same OOPSLA (which never gained significant usage), there were no other OO languages with both mechanisms for several years after the Genericity versus Inheritance paper and the implementation of genericity with inheritance in Eiffel available from 1986 on. Eiffel also introduced, as far as I know, the concept of constrained genericity, the second basic mechanism for combining genericity with inheritance, described in Eiffel: The Language (Prentice Hall, 1992, section 10.8) and discussed again in OOSC2 (section 16.4 and throughout). Similar mechanisms are present in many languages today.

It was not always so. I distinctly remember people bringing their friends to our booth at some conference in the early nineties, for the sole purpose of having a good laugh with them at our poster advertising genericity with inheritance. (“What is this thing they have and no one else does? Generi-sissy-tee? Hahaha.”). A few years later, proponents of Java were pontificating that no serious language needs generics.

It is undoubtedly part of of the cycle of invention (there is a Schopenhauer citation on this, actually the only thing from Schopenhauer’s philosophy that I ever understood [D]) that people at some point will laugh at you; if it did brighten their day, why would the inventor deny them one of the little pleasures of life? But in terms of who laughs last, along the way C++ got templates, Java got generics, C# finally did too, and nowadays all typed OO languages have something of the sort.

Language mechanisms: multiple inheritance

Some readers will probably have been told that multiple inheritance is a bad thing, and hence will not count it as a contribution, but if done properly it provides a major abstraction mechanism, useful in many circumstances. Eiffel showed how to do multiple inheritance right by clearly distinguishing between features (operations) and their names, defining a class as a finite mapping between names and features, and using renaming to resolve any name clashes.

Multiple inheritance was made possible by an implementation innovation: discovering a technique (widely imitated since, including in single-inheritance contexts) to implement dynamic binding in constant time. It was universally believed at the time that multiple inheritance had a strong impact on performance, because dynamic binding implied a run-time traversal of the class inheritance structure, already bad enough for single inheritance where the structure is a tree, but prohibitive with multiple inheritance for which it is a directed acyclic graph. From its very first implementation in 1986 Eiffel used what is today known as a virtual table technique which guarantees constant-time execution of routine (method) calls with dynamic binding.

Language mechanisms: safe GC through strong static typing

Simula 67 implementations did not have automatic garbage collection, and neither had implementations of C++. The official excuse in the C++ case was methodological: C programmers are used to exerting manual control of memory usage. But the real reason was a technical impossibility resulting from the design of the language: compatibility with C precludes the provision of a good GC.

More precisely, of a sound and complete GC. A GC is sound if it will only reclaim unreachable objects; it is complete if it will reclaim all unreachable objects. With a C-based language supporting casts (e.g. between integers and pointers) and pointer arithmetic, it is impossible to achieve soundness if we aim at a reasonable level of completeness: a pointer can masquerade as an integer, only to be cast back into a pointer later on, but in the meantime the garbage collector, not recognizing it as a pointer, may have wrongly reclaimed the corresponding object. Catastrophe.

It is only possible in such a language to have a conservative GC, meaning that it renounces completeness. A conservative GC will treat as a pointer any integer whose value could possibly be a pointer (because it lies between the bounds of the program’s data addresses in memory). Then, out of precaution, the GC will refrain from reclaiming the objects at these addresses even if they appear unreachable.

This approach makes the GC sound but it is only a heuristics, and it inevitably loses completeness: every once in a while it will fail to reclaim some dead (unreachable) objects around. The result is a program with memory leaks — usually unacceptable in practice, particularly for long-running or continuously running programs where the leaks inexorably accumulate until the program starts thrashing then runs out of memory.

Smalltalk, like Lisp, made garbage collection possible, but was not a typed language and missed on the performance benefits of treating simple values like integers as a non-OO language would. Although in this case I do not at the moment have a specific bibliographic reference, I believe that it is in the context of Eiffel that the close connection between strong static typing (avoiding mechanisms such as casts and pointer arithmetic) and the possibility of sound and complete garbage collection was first clearly explained. Explained in particular around 1990 in a meeting with some of the future designers of Java, which uses a similar approach, also taken over later on by C#.

By the way, no one will laugh at you today for considering garbage collection as a kind of basic human right for programmers, but for a long time the very idea was quite sulfurous, and advocating it subjected you to a lot of scorn. Here is an extract of the review I got when I submitted the first Eiffel paper to IEEE Transactions on Software Engineering:

Systems that do automatic garbage collection and prevent the designer from doing his own memory management are not good systems for industrial-strength software engineering.

Famous last words. Another gem from another reviewer of the same paper:

I think time will show that inheritance (section 1.5.3) is a terrible idea.

Wow! I wish the anonymous reviewers would tell us what they think today. Needless to say, the paper was summarily rejected. (It later appeared in the Journal of Systems and Software — as [82] in the publication list — thanks to the enlightened views of Robert Glass, the founding editor.)

Language mechanisms: void safety

Void safety is a property of a language design that guarantees the absence of the plague of null pointer dereferencing.

The original idea came (as far as I know) from work at Microsoft Research that led to the design of a research language called C-omega; the techniques were not transferred to a full-fledged programming language. Benefiting from the existence of this proof of concept, the Eiffel design was reworked to guarantee void safety, starting from my 2005 ECOOP keynote paper (Attached Types) and reaching full type safety a few years later. This property of the language was mechanically proved in a 2016 ETH thesis by A. Kogtenkov.

Today all significant Eiffel development produces void-safe code. As far as I know this was a first among production programming languages and Eiffel remains the only production language to provide a guarantee of full void-safety.

This mechanism, carefully crafted (hint: the difficult part is initialization), is among those of which I am proudest, because in the rest of the programming world null pointer dereferencing is a major plague, threatening at any moment to crash the execution of any program that uses pointers of references. For Eiffel users it is gone.

Language mechanisms: agents/delegates/lambdas

For a long time, OO programming languages did not have a mechanism for defining objects wrapping individual operations. Eiffel’s agent facility was the first such mechanism or among the very first together the roughly contemporaneous but initially much more limited delegates of C#. The 1999 paper From calls to agents (with P. Dubois, M. Howard, M. Schweitzer and E. Stapf, [196] in the list) was as far as I know the first description of such a construct in the scientific literature.

Language mechanisms: concurrency

The 1993 Communications of the ACM paper on Systematic Concurrent Object-Oriented Programming [136] was certainly not the first concurrency proposal for OO programming (there had been pioneering work reported in particular in the 1987 book edited by Tokoro and Yonezawa), but it innovated in offering a completely data-race-free model, still a rarity today (think for example of the multi-threading mechanisms of dominant OO languages).

SCOOP, as it came to be called, was implemented a few years later and is today a standard part of Eiffel.

Language mechanisms: selective exports

Information hiding, as introduced by Parnas in his two seminal 1972 articles, distinguishes between public and secret features of a module. The first OO programming language, Simula 67, had only these two possibilities for classes and so did Ada for modules.

In building libraries of reusable components I realized early on that we need a more fine-grained mechanism. For example if class LINKED_LIST uses an auxiliary class LINKABLE to represent individual cells of a linked list (each with a value field and a “right” field containing a reference to another LINKABLE), the features of LINKABLE (such as the operation to reattach the “right” field) should not be secret, since LINKED_LIST needs them; but they should also not be generally public, since we do not want arbitrary client objects to mess around with the internal structure of the list. They should be exported selectively to LINKED_LIST only. The Eiffel syntax is simple: declare these operations in a clause of the class labeled “feature {LINKED_LIST}”.

This mechanism, known as selective exports, was introduced around 1989 (it is specified in full in Eiffel: The Language, from 1992, but was in the Eiffel manuals earlier). I think it predated the C++ “friends” mechanism which serves a similar purpose (maybe someone with knowledge of the history of C++ has the exact date). Selective exports are more general than the friends facility and similar ones in other OO languages: specifying a class as a friend means it has access to all your internals. This solution is too coarse-grained. Eiffel’s selective exports make it possible to define the specific export rights of individual operations (including attributes/fields) individually.

Language mechanisms and implementation: serialization and schema evolution

I did not invent serialization. As a student at Stanford in 1974 I had the privilege, at the AI lab, of using SAIL (Stanford Artificial Intelligence Language). SAIL was not object-oriented but included many innovative ideas; it was far ahead of its time, especially in terms of the integration of the language with (what was not yet called) its IDE. One feature of SAIL with which one could fall in love at first sight was the possibility of selecting an object and having its full dependent data structure (the entire subgraph of the object graph reached by following references from the object, recursively) stored into a file, for retrieval at the next section. After that, I never wanted again to live without such a facility, but no other language and environment had it.

Serialization was almost the first thing we implemented for Eiffel: the ability to write object.store (file) to have the entire structure from object stored into file, and the corresponding retrieval operation. OOSC1 (section 15.5) presents these mechanisms. Simula and (I think) C++ did not have anything of the sort; I am not sure about Smalltalk. Later on, of course, serialization mechanisms became a frequent component of OO environments.

Eiffel remained innovative by tackling the difficult problems: what happens when you try to retrieve an object structure and some classes have changed? Only with a coherent theoretical framework as provided in Eiffel by Design by Contract can one devise a meaningful solution. The problem and our solutions are described in detail in OOSC2 (the whole of chapter 31, particularly the section entitled “Schema evolution”). Further advances were made by Marco Piccioni in his PhD thesis at ETH and published in joint papers with him and M. Oriol, particularly [352].

Language mechanisms and implementation: safe GC through strong static typing

Simula 67 (if I remember right) did not have automatic garbage collection, and neither had C++ implementations. The official justification in the case of C++ was methodological: C programmers are used to exerting manual control of memory usage. But the real obstacle was technical: compatibility with C makes it impossible to have a good GC. More precisely, to have a sound and complete GC. A GC is sound if it will only reclaim unreachable objects; it is complete if it will reclaim all unreachable objects. With a C-based language supporting casts (e.g. between integers and pointers) and pointer arithmetic, it is impossible to achieve soundness if we aim at a reasonable level of completeness: a pointer can masquerade as an integer, only to be cast back into a pointer later on, but in the meantime the garbage collector, not recognizing it as a pointer, may have wrongly reclaimed the corresponding object. Catastrophe. It is only possible in such a language to have a conservative GC, which will treat as a pointer any integer whose value could possibly be a pointer (because its value lies between the bounds of the program’s data addresses in memory). Then, out of precaution, it will not reclaim the objects at the corresponding address. This approach makes the GC sound but it is only a heuristics, and it may be over-conservative at times, wrongly leaving dead (i.e. unreachable) objects around. The result is, inevitably, a program with memory leaks — usually unacceptable in practice.

Smalltalk, like Lisp, made garbage collection possible, but was not a typed language and missed on the performance benefits of treating simple values like integers as a non-OO language would. Although in this case I do not at the moment have a specific bibliographic reference, I believe that it is in the context of Eiffel that the close connection between strong static typing (avoiding mechanisms such as casts and pointer arithmetic) and the possibility of sound and complete garbage collection was first clearly explained. Explained in particular to some of the future designers of Java, which uses a similar approach, also taken over later on by C#.

By the way, no one will laugh at you today for considering garbage collection as a kind of basic human right for programmers, but for a long time it was quite sulfurous. Here is an extract of the review I got when I submitted the first Eiffel paper to IEEE <em>Transactions on Software Engineering:

Software engineering: primacy of code

Agile methods are widely and properly lauded for emphasizing the central role of code, against designs and other non-executable artifacts. By reading the agile literature you might be forgiven for believing that no one brought up that point before.

Object Success (1995) makes the argument very clearly. For example, chapter 3, page 43:

Code is to our industry what bread is to a baker and books to a writer. But with the waterfall code only appears late in the process; for a manager this is an unacceptable risk factor. Anyone with practical experience in software development knows how many things can go wrong once you get down to code: a brilliant design idea whose implementation turns out to require tens of megabytes of space or minutes of response time; beautiful bubbles and arrows that cannot be implemented; an operating system update, crucial to the project which comes five weeks late; an obscure bug that takes ages to be fixed. Unless you start coding early in the process, you will not be able to control your project.

Such discourse was subversive at the time; the wisdom in software engineering was that you need to specify and design a system to death before you even start coding (otherwise you are just a messy “hacker” in the sense this word had at the time). No one else in respectable software engineering circles was, as far as I know, pushing for putting code at the center, the way the above extract does.

Several years later, agile authors started making similar arguments, but I don’t know why they never referenced this earlier exposition, which still today I find not too bad. (Maybe they decided it was more effective to have a foil, the scorned Waterfall, and to claim that everyone else before was downplaying the importance of code, but that was not in fact everyone.)

Just to be clear, Agile brought many important ideas that my publications did not anticipate; but this particular one I did.

Software engineering: the roles of managers

Extreme Programming and Scrum have brought new light on the role of managers in software development. Their contributions have been important and influential, but here too they were for a significant part prefigured by a long discussion, altogether two chapters, in Object Success (1995).

To realize this, it is enough to read the titles of some of the sections in those chapters, describing roles for managers (some universal, some for a technical manager): “risk manager”, “interface with the rest of the world” (very scrummy!), “protector of the team’s sanity”, “method enforcer” (think Scrum Master), “mentor and critic”. Again, as far as I know, these were original thoughts at the time; the software engineering literature for the most part did not talk about these issues.

Software engineering: outsourcing

As far as I know the 2006 paper Offshore Development: The Unspoken Revolution in Software Engineering was the first to draw attention, in the software engineering community, to the peculiar software engineering challenges of distributed and outsourced development.

Software engineering: automatic testing

The AutoTest project (with many publications, involving I. Ciupa, A. Leitner, Y. Wei, M. Oriol, Y. Pei, M. Nordio and others) was not the first to generate tests automatically by creating numerous instances of objects and calling applicable operations (it was preceded by Korat at MIT), but it was the first one to apply this concept with Design by Contract mechanisms (without which it is of little practical value, since one must still produce test oracles manually) and the first to be integrated in a production environment (EiffelStudio).

Software engineering: make-less system building

One of the very first decisions in the design of Eiffel was to get rid of Make files.

Feldman’s Make had of course been a great innovation. Before Make, programmers had to produce executable systems manually by executing sequences of commands to compile and link the various source components. Make enabled them to instead to define dependencies between components in a declarative way, resulting in a partial order, and then performed a topological sort to produce the sequence of comments. But preparing the list of dependencies remains a tedious task, particularly error-prone for large systems.

I decided right away in the design of Eiffel that we would never force programmers to write such dependencies: they would be automatically extracted from the code, through an exhaustive analysis of the dependencies between modules. This idea was present from the very the first Eiffel report in 1985 (reference [55] in the publication list): Eiffel programmers never need to write a Make file or equivalent (other than for non-Eiffel code, e.g. C or C++, that they want to integrate); they just click a Compile button and the compiler figures out the steps.

Behind this approach was a detailed theoretical analysis of possible relations between modules in software development (in many programming languages), published as the “Software Knowledge Base” at ICSE in 1985. That analysis was also quite instructive and I would like to return to this work and expand it.

Educational techniques: objects first

Towards an Object-Oriented Curriculum ( TOOLS conference, August 1993, see also the shorter JOOP paper in May of the same year) makes a carefully argued case for what was later called the Objects First approach to teaching programming. I would be interested to know if there are earlier publications advocating starting programming education with an OO language.

The article also advocated for the “inverted curriculum”, a term borrowed from work by Bernie Cohen about teaching electrical engineering. It was the first transposition of this concept to software education. In the article’s approach, students are given program components to use, then little by little discover how they are made. This technique met with some skepticism and resistance since the standard approach was to start from the very basics (write trivial programs), then move up. Today, of course, many introductory programming courses similarly provide students from day one with a full-fledged set of components enabling them to produce significant programs.

More recent articles on similar topics, taking advantage of actual teaching experience, are The Outside-In Method of Teaching Programming (2003) and The Inverted Curriculum in Practice (at ICSE 2006, with Michela Pedroni). The culmination of that experience is the textbook Touch of Class from 2009.

Educational techniques: Distributed Software Projects

I believe our team at ETH Zurich (including among others M. Nordio, J. Tschannen, P. Kolb and C. Estler and in collaboration with C. Ghezzi, E. Di Nitto and G. Tamburrelli at Politecnico di Milano, N. Aguirre at Rio Cuarto and many others in various universities) was the first to devise, practice and document on a large scale (see publications and other details here) the idea of an educational software project conducted in common by student groups from different universities. It yielded a wealth of information on distributed software development and educational issues.

Educational techniques: Web-based programming exercises

There are today a number of cloud-based environments supporting the teaching of programming by enabling students to compile and test their programs on the Web, benefiting from a prepared environment (so that they don’t have to download any tools or prepare control files) and providing feedback. One of the first — I am not sure about absolute precedence — and still a leading one, used by many universities and applicable to many programming languages, is Codeboard.

The main developer, in my chair at ETH Zurich, was Christian Estler, supported in particular by M. Nordio and M. Piccioni, so I am only claiming a supporting role here.

Educational techniques: key CS/SE concepts

The 2001 paper Software Engineering in the Academy did a good job, I think, of defining the essential concepts to teach in a proper curriculum (part of what Jeannette Wing’s 2006 paper called Computational Thinking).

Program verification: agents (delegates etc.)

Reasoning about Function Objects (ICSE 2010, with M. Nordio, P. Müller and J. Tschannen) introduced verification techniques for objects representing functions (such as agents, delegates etc., see above) in an OO language. Not sure whether there were any such techniques before.

Specification languages: Z

The Z specification language has been widely used for formal development, particularly in the UK. It is the design of J-R Abrial. I may point out that I was a coauthor of the first publication on Z in English (1980), describing a version that preceded the adaptation to a more graphical-style notation done later at Oxford. The first ever published description of Z, pertaining to an even earlier version, was in French, in my book Méthodes de Programmation (with C. Baudoin), Eyrolles, 1978, running over 15 pages (526-541), with the precise description of a refinement process.

Program verification: exceptions

Largely coming out of the PhD thesis of Martin Nordio, A Sound and Complete Program Logic for Eiffel (TOOLS 2009) introduces rules for dealing with exceptions in a Hoare-style verification framework.

Program verification: full library, and AutoProof

Nadia Polikarpova’s thesis at ETH, aided by the work of Carlo Furia and Julian Tschannen (they were the major contributors and my participation was less important), was as far as I know the first to produce a full functional verification of an actual production-quality reusable library. The library is EiffelBase 2, covering fundamental data structures.

AutoProof — available today, as a still experimental tool, through its Web interface, see here — relied on the AutoProof prover, built by the same team, and itself based on Microsoft Research’s Boogie and Z3 engines.

More

There are more concepts worthy of being included here, but for today I will stop here.

Notes

[A] One point of divergence between usual presentations of the substitution principle and the view in OOSC and my other publications is the covariance versus contravariance of routine argument types. It reflects a difference of views as to what the proper policy (both mathematically sound and practically usable) should be.

[B] The GoF book does not cite OOSC for the command or bridge patterns. For the command pattern it cites (thanks to Adam Kosmaczewski for digging up the GoF text!) a 1985 SIGGRAPH paper by Henry Lieberman (There’s More to Menu Systems than Meets the Screen). Lieberman’s paper describes the notion of command object and mentions undoing in passing, but does not include the key elements of the command pattern (as explained in full in OOSC1), i.e. an abstract (deferred) command class with deferred procedures called (say) do_it and undo_it, then specific classes for each kind of command, each providing a specific implementation of those procedures, then a history list of commands supporting multiple-level undo and redo as explained in OOSC1. (Reading Lieberman’s paper with a 2021 perspective shows that it came tantalizingly close to the command pattern, but doesn’t get to it. The paper does talk about inheritance between command classes, but only to “define new commands as extensions to old commands”, not in the sense of a general template that can be implemented in many specific ways. And it does mention a list of objects kept around to enable recovery from accidental deletions, and states that the application can control its length, as is the case with a history list; but the objects in the list are not command objects, they are graphical and other objects that have been deleted.)

[C] Additional note on the command pattern: I vaguely remember seeing something similar to the OOSC1 technique in an article from a supplementary volume of the OOPSLA proceedings in the late eighties or early nineties, i.e. at the same time or slightly later, possibly from authors from Xerox PARC, but I have lost the reference.

[D] Correction: I just checked the source and learned that the actual Schopenhauer quote (as opposed to the one that is usually quoted) is different; it does not include the part about laughing. So much for my attempts at understanding philosophy.

VN:F [1.9.10_1130]

Rating: 8.7/10 (26 votes cast)

VN:F [1.9.10_1130]

Rating: +7 (from 13 votes)

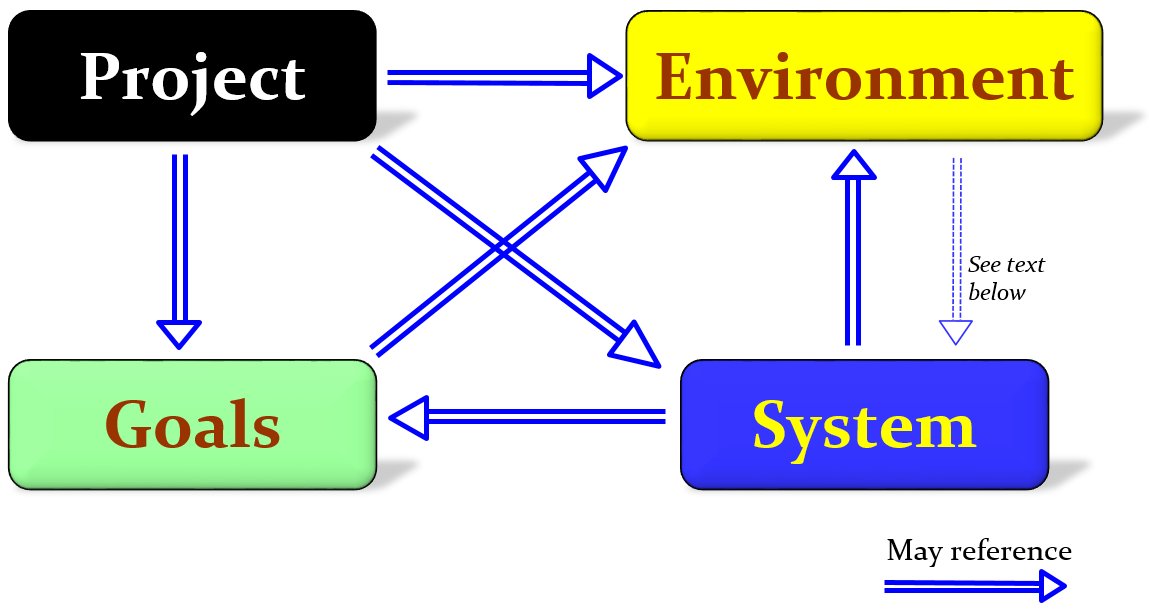

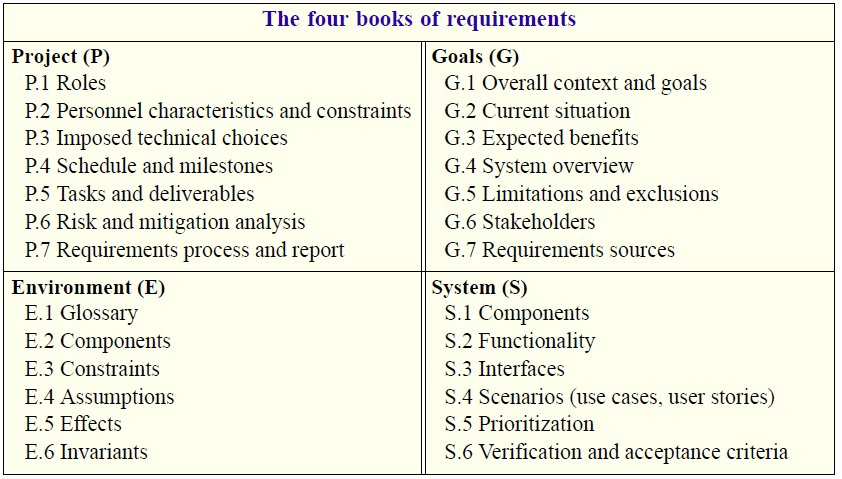

The Handbook introduces a comprehensive view of requirements including four elements or PEGS: Project, Environment, Goals and System. One of its principal contributions is the definition of a standard plan for requirements documents, consisting of the four corresponding books and replacing the obsolete IEEE 1998 structure.

The Handbook introduces a comprehensive view of requirements including four elements or PEGS: Project, Environment, Goals and System. One of its principal contributions is the definition of a standard plan for requirements documents, consisting of the four corresponding books and replacing the obsolete IEEE 1998 structure.

Informatics Europe

Informatics Europe LASER summer school

LASER summer school Propulsion Academy

Propulsion Academy