EiffelStudio 6.8, released last month, contains the first official implementation of the SCOOP programming model for concurrent programming. This is an important milestone; let me try to explain why.

Concurrency challenging us

Concurrency is the principal stumbling block in the progress of programming. Do not take just my word for it:

- Intel: “Multi-core processing is taking the industry on a fast-moving and exciting ride into profoundly new territory. The defining paradigm in computing performance has shifted inexorably from raw clock speed to parallel operations and energy efficiency” [1].

- Rick Rashid (head of Microsoft Research): “Multicore processors represent one of the largest technology transitions in the computing industry today, with deep implications for how we develop software.” [2].

- Bill Gates: “Multicore: This is the one which will have the biggest impact on us. We have never had a problem to solve like this. A breakthrough is needed in how applications are done on multicore devices.” [3]

- David Patterson: “Industry has basically thrown a Hail Mary. The whole industry is betting on parallel computing. They’ve thrown it, but the big problem is catching it.” [4]

- Gordon Bell: “I’m skeptical until I see something that gives me some hope… the machines are here and we haven’t got it right.” [4].

What has happened? Concurrency used to be a highly specialized domain of interest to a small minority of programmers building operating systems and networking systems and database engines. Just about everyone else could live comfortably pretending that the world was sequential. And then suddenly we all need to be aware of concurrency. The principal reason is the end of Moore’s law as we know it [5].

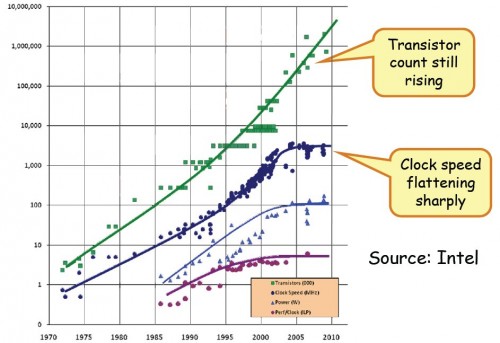

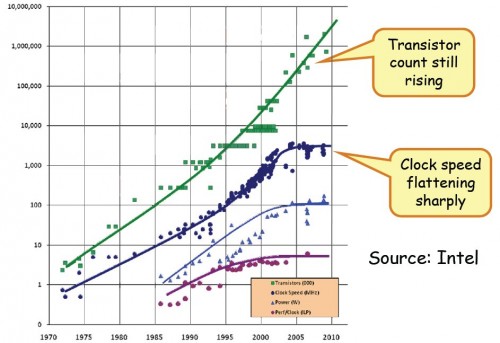

This chart show that we can no longer rely on the automatic and regular improvement to our programs’ performance, roughly by a factor of two every two years, thanks to faster chips. The free lunch is over; continued performance increases require taking advantage of concurrency, in particular through multithreading.

Performance is not the only reason for getting into concurrency. Another one is user convenience: ever since the first browser showed that one could write an email and load a Web page in the same window, users have been clamoring for multithreaded applications. Yet another source of concurrency requirements is the need to produce Internet and Web applications.

How do programmers write these applications? The almost universal answer relies on threading mechanisms, typically offered through some combination of language and library mechanisms: Java Threads, .NET threading, POSIX threads, EiffelThreads. The underlying techniques are semaphores and mutexes: nineteen-sixties vintage concepts, rife with risks of data races (access conflicts to a variable or resource, leading to crashes or incorrect computations) and deadlocks (where the system hangs). These risks are worse than the classical bugs of sequential programs because they are very difficult to detect through testing.

Ways to tame the beast

Because the need is so critical, the race is on — a “frantic” race in the words of a memorable New York Times article by John Markoff [4] — to devise a modern programming framework that will bring concurrent programming under control. SCOOP is a contender in this battle. In this post and the next I will try to explain why we think it is exactly what the world needs to tame concurrency.

The usual view, from which SCOOP departs, is that concurrent programming is intrinsically hard and requires a fundamental change in the way programmers think. Indeed some of the other approaches that have attracted attention imply radical departures from accepted programming paradigm:

- Concurrency calculi such as CSP [6, 7], CCS [8] and the π-Calculus [9] define high-level mathematical frameworks addressing concurrency, but they are very far from the practical concerns of programmers. An even more serious problem is that they focus on only some aspects of programming, but being concurrent is only one property of a program, among many others (needing a database, relying on graphical user interface, using certain data structures, perform certain computations…). We need mechanisms that integrate concurrency with all the other mechanisms that a program uses.

- Functional programming languages have also offered interesting idioms for concurrency, taking advantage of the non-imperative nature of functional programming. Advocacy papers have argued for Haskell [10 and Erlang [11] in this role. But should the world renounce other advances of modern software engineering, in particular object-oriented programming, for the sake of these mechanisms? Few people are prepared to take that step, and (as I have discussed in a detailed article [12]) the advantages of functional programming are counter-balanced by the superiority of the object-oriented model in its support for the modular construction of realistic systems.

What if we did not have to throw away everything and relearn programming from the ground up for concurrency? What if we could retain the benefits of five decades of software progress, as crystallized in modern object-oriented programming? This is the conjecture behind SCOOP: that we can benefit from all the techniques we have learned to make our software reliable, extendible and reusable, and add concurrency to the picture in an incremental way.

From sequential to concurrent

A detailed presentation of SCOOP will be for next Monday, but let me give you a hint and I hope whet your appetite by describing how to move a typical example from sequential to concurrent. Here is a routine for transferring money between two accounts:

transfer (amount: INTEGER ; source, target: ACCOUNT)

-- Transfer amount dollars from source to target.

require

enough: source·balance >= amount

do

source·withdraw (amount)

target·deposit (amount)

ensure

removed: source·balance = old source·balance – amount

added: target·balance = old target·balance + amount

end

The caller must satisfy the precondition, requiring the source account to have enough money to withdraw the requested amount; the postcondition states that the source account will then be debited, and the target account credited, by that amount.

Now assume that we naïvely apply this routine in a concurrent context, with concurrent calls

if acc1·balance >= 100 then transfer (acc1, acc2, 100) end

and

if acc1·balance >= 100 then transfer (acc1, acc3, 100) end

If the original balance on acc1 is 100, it would be perfectly possible in the absence of a proper concurrency mechanism that both calls, as they reach the test acc1·balance >= 100, find the property to be true and proceed to do the transfer — but incorrectly since they cannot both happen without bringing the balance of acc1 below zero, a situation that the precondition of transfer and the tests were precisely designed to rule out. This is the classic data race. To avoid it in the traditional approaches, you need complicated and error-prone applications of semaphores or conditional critical regions (the latter with their “wait-and-signal” mechanism, just as clumsy and low-level as the operations on semaphores).

In SCOOP, such data races, and data races of any other kind, cannot occur. If the various objects involved are to run in separate threads of control, the declaration of the routine will be of the form

transfer (amount: INTEGER ; source, target: separate ACCOUNT)

-- The rest of the routine exactly as before.

where separate is the only specific language keyword of SCOOP. This addition of the separate marker does the trick. will result in the following behavior:

- Every call to transfer is guaranteed exclusive access to both separate arguments (the two accounts).

- This simultaneous reservation of multiple objects (a particularly tricky task when programmers must take care of it through their own programs, as they must in traditional approaches) is automatically guaranteed by the SCOOP scheduler. The calls wait as needed.

- As a consequence, the conditional instructions (if … then… ) are no longer needed. Just call transfer and rely on SCOOP to do the synchronization and guarantee correctness.

- As part of this correctness guarantee, the calls may have to wait until the preconditions hold, in other words until there is enough money on the account.

This is the desired behavior in the transition from sequential to concurrent. It is achieved here not by peppering the code with low-level concurrent operations, not by moving to a completely different programming scheme, but by simply declaring which objects are “separate” (potentially running elsewhere.

The idea of SCOOP is indeed that we reuse all that we have come to enjoy in modern object-oriented programming, and simply declare what needs to be parallel, expecting things to work (“principle of least surprise”).

This is not how most of the world sees concurrency. It’s supposed to be hard. Indeed it is; very hard, in fact. But the view of the people who built SCOOP is that as much of the difficulty should be for the implementers. Hence the title of this article: for programmers, concurrency should be easy. And we think SCOOP demonstrates that it can be.

SCOOP in practice

A few words of caution: we are not saying that SCOOP as provided in EiffelStudio 6.8 is the last word. (Otherwise it would be called 7.0.) In fact, precisely because implementation is very hard, a number of details are still not properly handled; for example, as discussed in recent exchanges on the EiffelStudio user group [13], just printing out the contents of a separate string is non-trivial. We are working to provide all the machinery that will make everything work well, the ambitious goals and the practical details. But the basics of the mechanism are there, with a solid implementation designed to scale properly for large applications and in distributed settings.

In next week’s article I will describe in a bit more detail what makes up the SCOOP mechanisms. To get a preview, you are welcome to look at the documentation [14, 15]; I hope it will convince you that despite what everyone else says concurrent programming can be easy.

References

[1] Official Intel statement, see e.g. here.

[2] Rich Rashid, Microsoft Faculty Summit, 2008.

[3] This statement was cited at the Microsoft Faculty Summit in 2008 and is part of the official transcript; hence it can be assumed to be authentic, although I do not know the original source.

[4] Patterson and Bell citations from John Markoff, Faster Chips Are Leaving Programmers in Their Dust, New York Times, 17 December 2007, available here.

[5] The chart is from the course material of Tryggve Fossum at the LASER summer school in 2008.

[6] C.A.R. Hoare: em>Communicating Sequential Processes, Prentice Hall, 1985, also available online.

[7] Bill Roscoe: The Theory and Practice of Concurrency, revised edition, Prentice Hall, 2005, also available online.

[8] Robin Milner: Communication and Concurrency, Prentice Hall, 1989.

[9] Robin Milner: Communicating and Mobile Systems: The π-calculus, Cambridge University Press, 1999.

[10] Simon Peyton-Jones: Beautiful Concurrency, in Beautiful Code, ed. Greg Wilson, O’Reilly, 2007, also available online.

[11] Joe Armstrong: Erlang, in Communications of the ACM, vol. 53, no. 9, September 2010, pages 68-75.

[12] Bertrand Meyer: Software Architecture: Functional vs. Object-Oriented Design, in Beautiful Architecture, eds. Diomidis Spinellis and Georgios Gousios, O’Reilly, 2009, pages 315-348, available online.

[13] EiffelStudio user group; see here for a link to current discussions and to join the group.

[14] SCOOP project documentation at ETH, available here.

VN:F [1.9.10_1130]

Rating: 8.9/10 (8 votes cast)

VN:F [1.9.10_1130]

Rating: +6 (from 6 votes)

Informatics Europe

Informatics Europe LASER summer school

LASER summer school Propulsion Academy

Propulsion Academy