This week, Informatics Europe, the association of European computer science departments and industry research centers, is holding its annual ECSS event, bizarrely billed as “20 years of Informatics Europe”. (Informatics Europe was created at the end of 2006 and incorporated officially in 2011. The first ever mention of the name appeared in an email from Jan van Leeuwen to me with cc to Christine Choppy, received on 23 October 2006 at 21:37 — we were working late. Extract from Jan’s message: “The name `Informatics Europe’ has emerged as as a name that several people find appealing (and www.informatics-europe.org seems free).” So this year is at most the 18th anniversary.)

I would have liked to speak at this week’s event but was rejected, as explained at the end of this note. I am jotting down here a partial sketch of what I would have said, at least the introduction. (Engaging in a key-not since I was not granted a keynote.) Some of the underlying matters are of great importance and I hope to have the opportunity to talk or write about them in a more organized form in the future.

Informatics Europe came out of a need to support and unite Europe’s computer science (informatics) community. In October 2004 (funny how much seems to happen in October) Willy Zwaenepoel, chair of CS at EPFL (ETH Lausanne) wrote to me as the CS department head at ETH Zurich with an invitation to meet and discuss ways to work together towards making the discipline more visible in Switzerland. We met shortly thereafter, for a pleasant Sunday dinner on November 14. I liked his idea but suggested that any serious effort should happen at the European level rather than just Switzerland. We agreed to try to convince all the department heads that we could find across Europe and invite them to a first meeting. In the following weeks a frantic effort took place to identify, by going through university web sites and personal contacts, as many potential participants as possible. The meeting, dubbed ECSS for European Computer Science Summit, took place at ETH Zurich on (you almost guessed it) 20-21 October 2005. The call for participation started with:

The departments of computer science at EPF Lausanne and ETH Zurich are taking the initiative of a first meeting of heads of departments in Europe.

Until now there hadn’t been any effort, comparable to the Computing Research Association in the US with its annual “Snowbird” conference, to provide a forum where they could discuss these matters and coordinate their efforts. We feel it’s time to start.

The event triggered enormous enthusiasm and in the following years we created the association (first with another name, pretty ridiculous in retrospect, but fortunately Jan van Leeuwen intervened) and developed it. For many years the association was hosted at ETH in my group, with a fantastic Executive Board (in particular its two initial vice presidents, Jan van Leeuwen and Christine Choppy) and a single employee (worth many), Cristina Pereira, who devoted an incredible amount of energy to developing services for the members, who are not individuals but organizations (university departments and industry research labs). One of the important benefits of the early years was to bring together academics from the Eastern and Western halves of the continent, the former having only recently emerged from communism and eager to make contacts with their peers from the West.

This short reminder is just to situate Informatics Europe for those who do not know about the organization. I will talk more about it at the end because the true subject of this note is not the institution but European computer science. The common concern of the founders was to bring the community together and enable it to speak with a single voice to advance the discipline. The opening paragraphs of a paper that Zwaenepoel and I published in Communications of the ACM to announce the effort (see here for the published version, or here for a longer one, pre-copy-editing) reflect this ambition:

Europe’s contribution to computer science, going back seventy years with Turing and Zuse, is extensive and prestigious; but the European computer science community is far from having achieved the same strength and unity as its American counterpart. On 20 and 21 October 2005, at ETH Zurich, the “European Computer Science Summit” brought together, for the first time, heads of computer science departments throughout Europe and its periphery. This landmark event was a joint undertaking of the CS departments of the two branches of the Swiss Federal Institute of Technology: EPFL (Lausanne) and ETH (Zurich).

.

The initiative attracted interest far beyond its original scope. Close to 100 people attended, representing most countries of the European Union, plus Switzerland, Turkey, Ukraine, Russia, Israel, a delegate from South Africa, and a representative of the ACM, Russ Shackelford, from the US. Eastern Europe was well represented. The program consisted of two keynotes and a number of panels and workshops on such themes as research policy, curriculum harmonization, attracting students, teaching CS to non-CS students, existing national initiatives, and plans for a Europe-wide organization. The reason our original call for participation attracted such immediate and widespread interest is that computer science in Europe faces a unique set of challenges as well as opportunities. There were dozens of emails in the style “It’s high time someone took such an initiative”; at the conference itself, the collective feeling of a major crystallizing event was palpable.

.

The challenges include some old and some new. Among the old, the fragmentation of Europe and its much treasured cultural diversity have their counterparts in the organization of the educational and research systems. To take just three examples from the education side, the UK has a system that in many ways resembles the US standard, although with significant differences (3- rather than 4-year bachelor’s degree, different hierarchy of academic personnel with fewer professors and more lecturers); German universities have for a long time relied on a long (9-semester) first degree, the “Diplom”; and France has a dual system of “Grandes Écoles”, engineering schools, some very prestigious and highly competitive, but stopping at a Master’s-level engineering degree, and universities with yet another sequence of degrees including a doctorate.

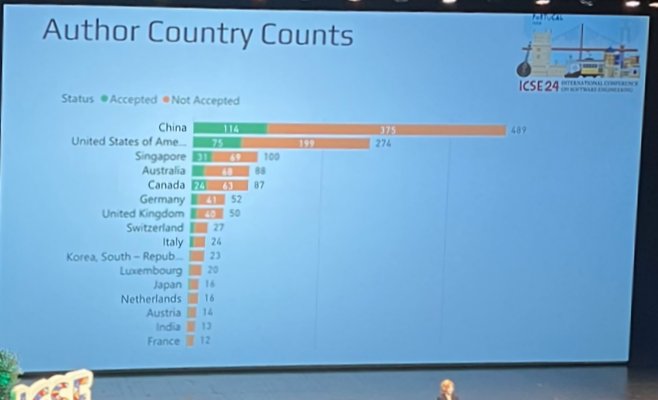

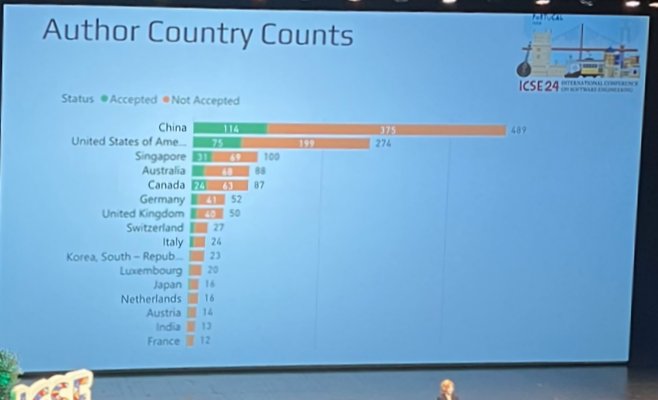

And so on. The immediate concerns in 2024 are different (Bologna adoption woes are a thing of the past) but the basic conundrum remains: the incredible amount of talent and creativity present in Europe remains dormant; research in academia (and industry) fails to deliver anywhere close to its potential. The signs are everywhere; as this note is only a sketch let me just mention a handful. The following picture shows the provenance of papers in this year’s International Conference on Software Engineering (ICSE), the premier event in the field. Even if you cannot read all the details (it’s a photo taken quickly from a back row in the opening session, sorry for the bad quality), the basic message is unmistakable: all China, the US, then some papers from Singapore, Australia and Canada. A handful from Germany and Switzerland, not a single accepted paper from France! In a discipline that is crucial for the future of every European nation.

Venture capital? There is a bit more than twenty years ago, but it is still limited, avaricious and scared of risks. Government support? Horizon and other EU projects have helped many, with ERC grants in particular (a brilliant European exclusive) leading to spectacular successes, but the bulk of the funding is unbelievably bureaucratic, forcing marriages of reason between institutions that have nothing in common (other than the hope of getting some monies from Brussels) and feeding a whole industry of go-between companies which claim to help applicants but contribute exactly zero to science and innovation. They have also had the perverse effect of limiting national sources of funding. (In one national research agency on whose evaluation committee I sat, the acceptance rate is 11%. In another, where I recently was on the expert panel, it’s more like 8%. Such institutions are the main source of non-EU research funding in their respective countries.)

The result? Far less innovation than we deserve and a brain drain that every year gets worse. Some successes do occur, and we like to root for Dassault, SAP, Amadeus and more recently companies like Mistral, but almost all of the top names in technology — like them or loathe them — are US-based (except for their Chinese counterparts): Amazon, Microsoft, Google, OpenAI, Apple, Meta, X, or (to name another software company) Tesla. They benefit from European talent and European education: some have key research centers in Europe, and all have European engineers and researchers. So do non-European universities; not a few of the ICSE papers labelled above as “American” or “Canadian” are actually by European authors. Talk to a brilliant young researcher or bright-eyed entrepreneur in Europe: in most cases, you will hear that he wants to find a position or create a company in the US, because that is where the action is.

Let me illustrate the situation with a vivid example. In honor of Niklaus Wirth’s 80th birthday I co-organized a conference in 2014 where at the break a few of us were chatting with one of the speakers, Vint Cerf. Someone asked him a question which was popping up everywhere at that time, right in the middle of the Snowden affair: “if you were a sysadmin for a government organization, would you buy a Huawei router?”. Cerf’s answer was remarkable: I don’t know, he said, but there is one thing I do not understand: why in the world doesn’t Europe develop its own cloud solution? So honest, coming from an American — a Vice President at Google! — and so true. So true today still: we are all putting all our data on Amazon’s AWS and Cerf’s employer’s Google Cloud and IBM Cloud and Microsoft Azure. Total madness. (A recent phenomenon that appears even worse is something I have seen happening at European university after university: relinquishing email and other fundamental solutions to Microsoft! More and more of us now have our professional emails at outlook.com. Even aside from the technical issues, such en-masse surrender is demented.) Is Europe so poor or so retarded that it cannot build local cloud or email solutions? Of course not. In fact, some of the concepts were invented here!

This inability to deliver on our science and technology potential is one of the major obstacles to social and economic improvement in Europe. (Case in point: there is an almost one-to-one correspondence between the small set of countries that are doing better economically than the rest of Europe, often much better, and the small set of countries that take education and science seriously, giving them enough money and freeing them from overreaching bureaucracy. Did I mention Switzerland?) The brain drain should be a major source of worry; some degree of it is of course normal — enterprising people move around, and there are objective reasons for the magnetic attraction of the US — but the phenomenon is growing dangerously and is too unidirectional. Europe should offer its best and brightest a local choice commensurate with the remote one.

Many cases seem to suggest that Europe has simply given up on its ambitions. One specific example — academia-related but important — adds to the concerns raised apropos ICSE above. With a group of software engineering pioneers from across Europe (including some who would later help with Informatics Europe) we started the European Software Engineering Conference in 1987. I was the chair of the first conference, in Strasbourg that year, and the chair of the original steering committee for the following years (I later organized the 2013 session). The conference blossomed, reflecting the vibrant life of the European software engineering community, and open of course to researchers from all over the world. (The keynote speaker in Strasbourg was David Parnas, who joked that we had invited him, an American, because the French and Germans would never agree to a speaker from the other country. That quip was perhaps funny but as unfair as it was wrong: founders from different countries, notably including Italy and Belgium, even the UK, were working together in a respectful and friendly way without any national preferences.) Having done my job I stepped aside but was flabbergasted to learn some years later that ESEC had attached itself to a US-based event, FSE (the symposium on Foundations of Software Engineering). The inevitable and predictable happened: FSE was supposed to be ESEC-FSE every other year, but soon that practice fell out and now ESEC is no more. FSE is not the culprit here: it’s an excellent conference (I had a paper in the last edition), it is just not European. My blood boils each time I think about how the people who should have nurtured and developed ESEC, the result of many years of discussions and of excellent Europe-wide cooperation, betrayed their mission and let the whole thing disappear. Pathetic and stupid, and terrible for Europe, which no longer has an international conference in this fundamental area of modern technology.

The ESEC story helps think about the inevitable question: who is responsible? Governments are not blameless; they are good at speeches but less at execution. When they do intervene, it’s often with haste (reacting to hype with pharaonic projects that burn heaps of money before running out of favor and delivering nothing). In France, the tendency is sometimes to let the state undertake technical projects that it cannot handle; the recipes that led to the TGV or Ariane do not necessarily work for IT. (A 2006 example was an attempt to create a homegrown search engine, which lasted just long enough to elicit stinging mockery in the Wall Street Journal, “Le Google”, unfortunately behind a paywall.)

It is too easy, however, to cast all the blame on outsiders. Perhaps the most important message that I would have wanted to convey to the department heads, deans, rectors and other academic decision-makers attending ECSS this week is that we should stop looking elsewhere and start working on the problems for which we are responsible. Academia is largely self-governed. Even in centralized countries where many decisions are made at the national level in ministries, the staff in those ministries largely consists of academics on secondment to the administration. European academia — except in the more successful countries, already alluded to, and by the way not exempt either from some of the problems of their neighbors — is suffocating under the weight of absurd rules. It is fashionable to complain about the bureaucracy, but many of the people complaining have the power to make and change these rules.

The absurdities are everywhere. In country A, a PhD must take exactly three years. (Oh yes? I thought it was the result that mattered.) By the way, if you have funding for 2.5 years, you cannot hire a PhD student (you say you will find the remaining funding in due time? What? You mean you are taking a risk?) In country B, you cannot be in the thesis committee of the student you supervised. (This is something bequeathed from the British system. After Brexit!) Countries C, D, E and F (with probably G, H, I, J and K to follow) have adopted the horrendous German idea of a “habilitation”, a second doctorate-like process after the doctorate, a very effective form of infantilization which maintains scientists in a subservient state until their late thirties, preventing them during their most productive years from devoting their energy to actual work. Universities everywhere subject each other to endless evaluation schemes in which no one cares about what you actually do in education and research but the game is about writing endless holier-than-thou dissertations on inclusiveness, equality etc. with no connection to any actual practice. In country L, politicized unions are represented in all the decision-making bodies and impose a political agenda, censoring important areas of research and skewing scientist hires on the basis of political preferences. In country M, there is a rule for every elementary event of academic life and the rule suffers no exception (even when you discover that it was made up two weeks earlier with the express goal of preventing you from doing something sensible). In country N, students who fail an exam have the right to a retake, and then a second retake, and then a third retake, in oral form of course. 
In country O, where all university presidents make constant speeches about the benefits of multidisciplinarity, a student passionate about robotics but with a degree in mechanical engineering cannot enroll in a master’s degree in robotics in the computer science department. In country P (and Q and R and S and T) students and instructors alike must, for any step of academic life, struggle with a poorly designed IT system, to which there is no alternative. In country U, expenses for scientific conferences are reimbursed six months later, when not rejected as non-conformant. In country V, researchers and educators are hired through a protracted committee process which succeeds in weeding out candidates with an original profile. In country W, the prime criterion for hiring researchers is the H-index. In country X, it is the number of publications. In country Y, management looks at your research topics and forces you to change them every five years. I would need other alphabets but could go on.

When we complain about how difficult it is to get things done, we are very much like the hero of Kafka’s Before the Law, who grows old waiting in front of a gate, only to learn in his final moments that he could just have entered by pushing it. We need to push the gate of European academia. No one but we ourselves is blocking it. Start by simplifying everything, but there are more ways to enter; they are what I would have liked to present at ECSS and will have to wait for another day.

Which brings me back to the ECSS conference. I wrote to its organizers asking for the opportunity to give a talk. Naïvely, I thought the request would be obvious. After all, while Informatics Europe was at every step a group effort, with an outstanding group of colleagues from across Europe (I mentioned a few at the beginning, but there were many more, including all the members of the initial Executive Board), I played the key role as one of the two initiators of the idea, the organizer of the initial meeting and several of the following ECSS, the founding president for two terms (8 years), the prime writer of the foundational documents, the host of the first secretariat for many years in my ETH chair, the lead author of several reports, the marketer recruiting members, and the jack-of-all-trades for Informatics Europe. It may be exaggerated to say that for the first few years I carried the organization on my shoulders, but it is a fact that I found the generous funding (from ETH, industry partners and EPFL thanks to Zwaenepoel) that enabled us to get started and enabled me, when I passed the baton to my successor, to give him an organization in a sound financial situation, some 80 dues-paying members, and a strong record of achievements. Is it outrageous, after two decades, to ask for a microphone to talk about the future for 45 minutes? The response I got from the Informatics Europe management was as surprising as it was boorish: in our program (they said in February 2024!) there is no place left. To add injury to insult they added that if I really wanted I could participate in some kind of panel discussion. (Sure, fly to Malta in the middle of the semester, cancel 4 classes and meetings, miss paper deadlines, all for 5 minutes of trying to put in a couple of words.
By the way, one of the principles we had for the organization of ECSS was always to be in a big city with an important local community and an airport with lots of good connections to the principal places in Europe — and beyond for our US guests.) When people inherit a well-functioning organization, the result of hard work by a succession of predecessors, it is hard to imagine what pleasure they can take in telling them to go to hell. Pretty sick.

For me Informatics Europe was the application to my professional life of what remains a political passion: a passion for Europe and democracy. On this same blog in 2012 I published an article entitled “The most beautiful monument of Europe”, a vibrant hymn to the European project. While I know that some of it may appear naïve or even ridiculous, I still adhere to everything it says and I believe it is worth reading. While I have not followed the details of the activities of Informatics Europe since I stopped my direct involvement, I am saddened not to see any trace of European sentiment in it. We used to have Ukrainian members, from Odessa Polytechnic, who participated in the first ECSS meetings; today there is no member from Ukraine listed. One would expect to see prominent words of solidarity with the country, which is defending our European values, including academic ones. Is that another sign of capitulation?

I am also surprised to see few new in-depth reports. Our friends from the US Computing Research Association, who were very helpful at the beginning of Informatics Europe (they included in particular Andy Bernat and Ed Lazowska, and Willy Zwaenepoel himself who had been a CRA officer during his years in the US), told us that one of the keys to success was to provide the community with factual information. Armed with that advice, we embarked on successive iterations of the “Informatics in Europe: Key Data” reports, largely due to the exhaustive work of Cristina Pereira, which provided unique data on salaries (something that we often do not discuss in Europe, but it is important to know how much a PhD student, postdoc, assistant professor or full professor makes in every surveyed country), student numbers, degrees, gender representation etc., with the distinctive quality that — at Cristina’s insistence — we favored exactness over coverage: we included only the countries for which we could get reliable data, but for those we guaranteed full correctness and accuracy. From the Web site it seems these reports — which indeed required a lot of effort, but are they not the kind of thing the membership expects? — were discontinued some years ago. While the site shows some other interesting publications (“recommendations”), it seems regrettable to walk away from hard foundational work.

New management is entitled to its choices (as previous management is entitled to raise concerns). Beyond such differences of appreciation, the challenges facing European computer science are formidable. The enemies are outside, but they are also in ourselves. The people in charge are asleep at the wheel. I regret not having had the opportunity to try to wake them up in person, but I do hope for a collective jolt to enable our discipline to bring Europe the informatics benefits Europe deserves.